The short answer would be: no, there still isn't. I ran into this problem when trying to encode as much information as possible into a JSON string, meaning UTF-8 without control characters, backslashes, or quotes. I went out and researched how many bits you can squeeze into valid UTF-8 bytes, and I disagree with the answers stating that UTF-8 brings too much overhead.

With the 6/7-bit scheme described below, every received code yields either 6 or 7 bits, so the decoder generates 6-7 bits of output each iteration. For uniformly distributed data I think this is optimal. If you know that you have more zeros than ones in your source, then you might want to map the 7-bit codes to the start of the space so that it is more likely that you can use a 7-bit code.

Binary to text encoding code

Encoding works on 6 bits of input at a time: if those six bits are greater than or equal to 100001, shift another bit. Then look up the corresponding 7-bit output code, translate it to fit in the output space, and send it. You will be shifting 6 or 7 bits of input each iteration.

To decode, accept a byte and translate it to the raw output code. If the raw code is less than 0100001, shift the corresponding 6 bits onto your output; otherwise shift the corresponding 7 bits.
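The encode and decode steps above can be sketched in Python. This is only an illustration under stated assumptions: the alphabet is taken to be the 95 printable ASCII characters 0x20-0x7E, the 33 short codes are the ones below the 100001 threshold, trailing input is padded with zero bits, and the names `encode`, `decode`, `ALPHABET` and `N_SHORT` are made up for this example.

```python
# Assumption: 95-character printable ASCII alphabet, code i <-> chr(0x20 + i).
ALPHABET = [chr(c) for c in range(0x20, 0x7F)]
N_SHORT = 33  # codes 0..32 carry 6 bits; codes 33..94 carry 7 bits


def encode(data: bytes) -> str:
    """Turn bytes into text by consuming 6 or 7 input bits per character."""
    bits = "".join(f"{b:08b}" for b in data)
    out = []
    pos = 0
    while pos < len(bits):
        # Shift off 6 bits, padding the tail of the input with zeros.
        value = int(bits[pos:pos + 6].ljust(6, "0"), 2)
        pos += 6
        if value >= N_SHORT:
            # 100001 or above: shift one more bit (zero if input is exhausted).
            extra = bits[pos] if pos < len(bits) else "0"
            pos += 1
            # Raw 7-bit values 66..127 map onto output codes 33..94.
            value = ((value << 1) | int(extra)) - N_SHORT
        out.append(ALPHABET[value])
    return "".join(out)


def decode(text: str) -> bytes:
    """Inverse of encode: each character yields 6 or 7 output bits."""
    chunks = []
    for ch in text:
        code = ord(ch) - 0x20
        if not 0 <= code < len(ALPHABET):
            raise ValueError(f"character {ch!r} is outside the alphabet")
        if code < N_SHORT:
            chunks.append(f"{code:06b}")            # short code: 6 bits
        else:
            chunks.append(f"{code + N_SHORT:07b}")  # long code: 7 bits
    bits = "".join(chunks)
    # At most 6 padding bits were added, so dropping the partial byte is safe.
    usable = len(bits) - len(bits) % 8
    return bytes(int(bits[i:i + 8], 2) for i in range(0, usable, 8))


if __name__ == "__main__":
    message = b"binary \x00\xff data"
    text = encode(message)
    print(repr(text), decode(text) == message)
```

Because the encoder never adds more than 6 padding bits, the decoder can recover the original length by discarding the incomplete final byte. Note that this alphabet includes the quote and backslash characters, so it would need a different lookup table for a JSON string.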

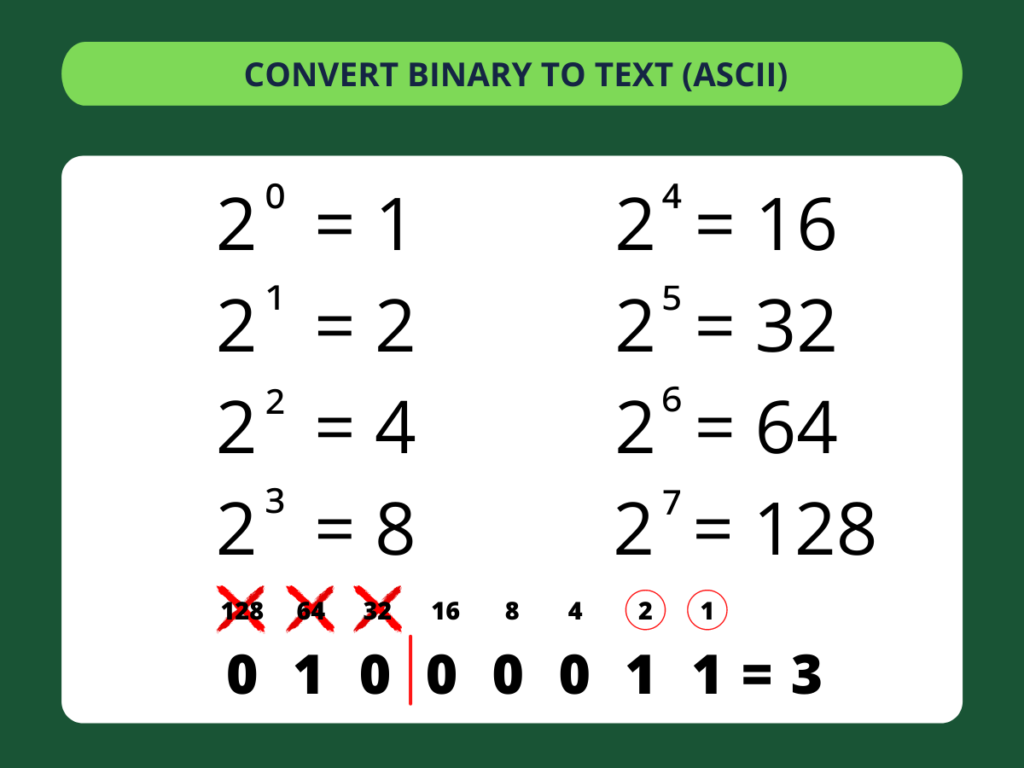

UTF-8 is not a good transport for arbitrarily encoded binary data. It is able to transport values 0x01-0x7F with only 14% overhead. I'm not sure if 0x00 is legal; likely not. But anything from 0x80 upward expands to multiple bytes in UTF-8. I'd treat UTF-8 as a constrained channel that passes 0x01-0x7F, or 127 unique characters. If you don't need delimiters then you can transmit log2(127) ~= 6.99 bits per character.

A general solution to this problem: assume an alphabet of N characters whose binary encodings are 0 to N-1. (If the encodings are not as assumed, then use a lookup table to translate between our intermediate 0..N-1 representation and what you actually send and receive.)

Assume 95 characters in the alphabet. Now: some of these symbols will represent 6 bits, and some will represent 7 bits. If we have A 6-bit symbols and B 7-bit symbols, then:

A+B=95 (total number of symbols)
2A+B=128 (total number of 7-bit prefixes that can be made. You can start 2 prefixes with a 6-bit symbol, or one with a 7-bit symbol.)

Solving the system gives A=33 and B=62. Raw codes 000000 to 100000 are the 33 six-bit symbols, which is why encoding starts by shifting off 6 bits of input and comparing them with 100001.
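The two constraints on A and B (A+B equals the alphabet size, 2A+B equals the number of long prefixes) can be solved for any alphabet size. The sketch below does that and also estimates the average number of input bits consumed per character, assuming uniformly random input; `split_symbols` and `mean_bits_per_char` are hypothetical helper names, not part of any library.

```python
def split_symbols(n: int) -> tuple[int, int]:
    """Return (A, B): how many symbols carry k bits and how many carry k+1 bits.

    k is floor(log2(n)). The constraints are A + B = n and 2A + B = 2**(k+1).
    """
    if n < 2:
        raise ValueError("need at least two symbols")
    k = n.bit_length() - 1          # floor(log2(n))
    long_prefixes = 1 << (k + 1)    # number of (k+1)-bit prefixes
    a = long_prefixes - n           # subtract the first equation from the second
    b = n - a
    return a, b


def mean_bits_per_char(n: int) -> float:
    """Average input bits per output character for uniformly random input."""
    k = n.bit_length() - 1
    a, _ = split_symbols(n)
    p_short = a / (1 << k)          # chance that a k-bit prefix is a short symbol
    return k * p_short + (k + 1) * (1 - p_short)


if __name__ == "__main__":
    for size in (94, 95, 127):
        print(size, split_symbols(size), round(mean_bits_per_char(size), 3))
```

For 95 characters this reproduces the 33/62 split. The average comes out slightly below log2(95) because the code spends a whole number of bits on every symbol.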

Binary to text encoding how to

This really depends on the nature of the binary data, and the constraints that "text" places on your output.

First off, if your binary data is not compressed, try compressing before encoding. We can then assume that the distribution of 1/0 or individual bytes is more or less random.

Now: why do you need text? Typically, it's because the communication channel does not pass through all characters equally. For example, you may require pure ASCII text, whose printable characters range from 0x20-0x7E. That gives you 95 characters, and each character can theoretically encode log2(95) ~= 6.57 bits. It's easy to define a transform that comes pretty close.

But: what if you need a separator character? Now you only have 94 characters, etc. So the choice of an encoding really depends on your requirements.

To take an extremely stupid example: if your channel passes all 256 characters without issues, and you don't need any separators, then you can write a trivial transform that achieves 100% efficiency. :-) How to do so is left as an exercise for the reader.
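To make the capacity figures concrete, here is a small sketch that computes the theoretical bits per character for a given alphabet size, along with the resulting size overhead when each character occupies one 8-bit byte on the wire. The function names are invented for this example, and the one-byte-per-character assumption only holds for single-byte alphabets such as ASCII.

```python
import math


def bits_per_char(alphabet_size: int) -> float:
    """Theoretical information carried by one character of the alphabet."""
    return math.log2(alphabet_size)


def overhead(alphabet_size: int, bits_on_wire: int = 8) -> float:
    """Extra output size relative to the raw data.

    Assumes every character costs bits_on_wire bits on the wire.
    """
    return bits_on_wire / bits_per_char(alphabet_size) - 1


if __name__ == "__main__":
    for size in (94, 95, 256):
        print(f"{size:3d} chars: {bits_per_char(size):.2f} bits/char, "
              f"{overhead(size):.1%} overhead")
```

Losing one character to a separator (95 down to 94) costs very little capacity, while a channel that passes all 256 byte values has no overhead at all.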
